Bulk Ingestion

Introduction

This guide explains how to re-ingest historical audit data from the VAST audit directory into Easy Auditor's index using the Bulk Ingest feature. Bulk Ingest captures and indexes any unprocessed, compressed logs, so that Easy Auditor maintains a complete record of user activity.

Requirements

Before starting the bulk ingestion process, ensure you have:

- ECA Mounts: An NFS mount is configured on the ECA turboaudit nodes to read audit files. Bulk ingestion uses the same NFS mount that RSW and EA use for active audit data processing.

- Active ECA: At least one turboaudit and one evtarchive must be running (working ECAs)

- Software Version: Release 4.2.0 or later

- GUI Access: Access to the Easy Auditor GUI

- Storage Space: Sufficient storage space on the SQL database server

Limitations

- No Bulk-Ingest Support: Bulk ingestion is a background task and is not supported under the standard support contract. The system prioritizes processing of current audit data over bulk ingestion, so there is no way to predict how long a bulk ingestion job will take.

- Concurrent Jobs: Only one job can run at a time.

- Job Queueing: If you submit more than one ingestion job, the jobs are queued and only one executes at a time.

- Date Range: Ingestion is supported for audit data from up to 3 days prior.

- Non-changeable Priority: There is no option to change the priority of bulk ingestion jobs; they always run at a lower priority than active audit data processing.
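If you script around the Date Range limit, a start date can be checked before it is entered in the GUI. The sketch below is a minimal illustration that assumes the 3-day window is counted in whole calendar days back from today; the function name validate_start_date is hypothetical and not part of Easy Auditor, whose own check may count the window differently.

```python
from datetime import date, timedelta

# Assumption: the 3-day limit is counted in whole calendar days back from today.
MAX_DAYS_BACK = 3


def validate_start_date(start: date, today: date, max_days_back: int = MAX_DAYS_BACK) -> None:
    """Raise ValueError if start is in the future or older than the supported window."""
    if start > today:
        raise ValueError(f"start date {start} is in the future")
    if today - start > timedelta(days=max_days_back):
        raise ValueError(
            f"start date {start} is more than {max_days_back} days before {today}"
        )


if __name__ == "__main__":
    today = date(2025, 5, 17)
    validate_start_date(date(2025, 5, 15), today)  # inside the window: no error
    try:
        validate_start_date(date(2025, 5, 10), today)
    except ValueError as err:
        print(err)  # start date 2025-05-10 is more than 3 days before 2025-05-17
```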

How to Run a Bulk Ingest Job

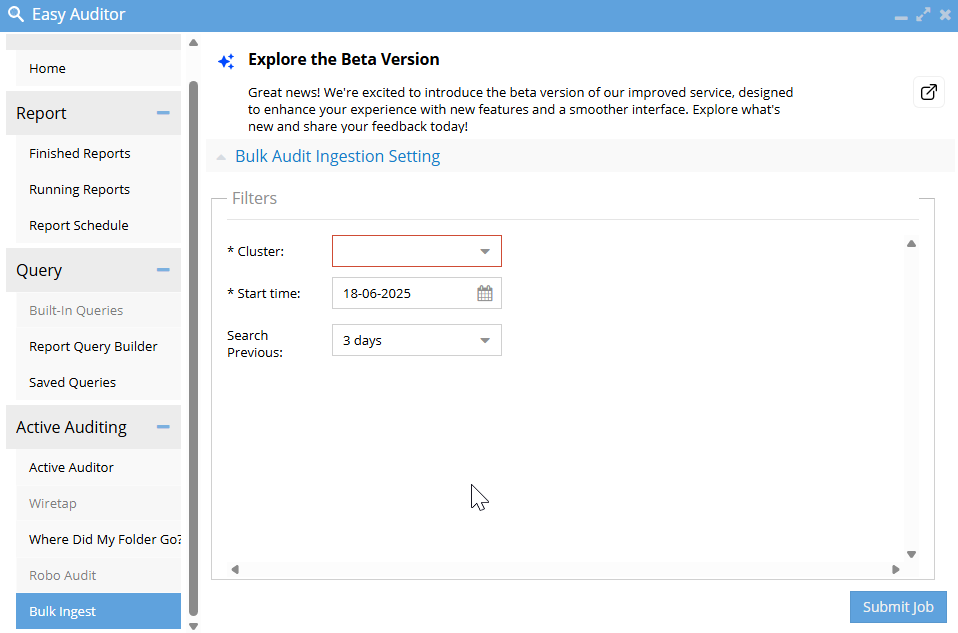

Via Easy Auditor GUI

- Select Cluster: Choose the target cluster for ingestion.

- Select Date Range: Pick the most recent date (up to 3 days ago) to begin ingesting archived audit logs.

- Submit Job: Click Submit Job to start the ingestion.

Job Steps Overview

- Start Bulk Ingest Job: The bulk ingest job lock is acquired, the settings are saved, and ingestion begins.

- Wait for Completion: The job runs until all files are ingested.

- View Statistics & Completion: The job is marked complete and the statistics are updated.

Note: Only one job runs at a time. Eyeglass waits until the current job completes before starting a queued one.
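The one-job-at-a-time rule behaves like a single-worker queue. The sketch below is a toy model for reasoning about submission order only; it is not Eyeglass code, the class and method names (BulkIngestQueue, submit, complete_current) and job IDs are hypothetical, and it assumes queued jobs start in submission (FIFO) order, which the text implies but does not state.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional


@dataclass
class BulkIngestQueue:
    """Toy model: one bulk ingest job runs; later jobs wait in FIFO order (assumed)."""

    running: Optional[str] = None
    queued: Deque[str] = field(default_factory=deque)
    finished: List[str] = field(default_factory=list)

    def submit(self, job_id: str) -> None:
        # Start at once if no job holds the lock; otherwise wait in the queue.
        if self.running is None:
            self.running = job_id
        else:
            self.queued.append(job_id)

    def complete_current(self) -> None:
        # Finish the running job and hand the lock to the oldest queued job, if any.
        if self.running is None:
            raise RuntimeError("no bulk ingest job is running")
        self.finished.append(self.running)
        self.running = self.queued.popleft() if self.queued else None


if __name__ == "__main__":
    q = BulkIngestQueue()
    q.submit("job-1")
    q.submit("job-2")
    print(q.running, list(q.queued))  # job-1 ['job-2']
    q.complete_current()
    print(q.running, q.finished)  # job-2 ['job-1']
```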

Technical Details and Monitoring

Job Status Flow and ZooKeeper Interaction

Settings are stored in ZooKeeper at:

/ecasettings/bulkingest.json

During ingestion, Eyeglass polls ZooKeeper to check status.

When ingestion finishes:

- Stats are saved to /ecasettings/bulkingest/stats

- Processed files are saved to /ecasettings/bulkingest/history

- A local copy of the final stats is stored at /opt/superna/sca/data/ecasettings/bulkingest.json

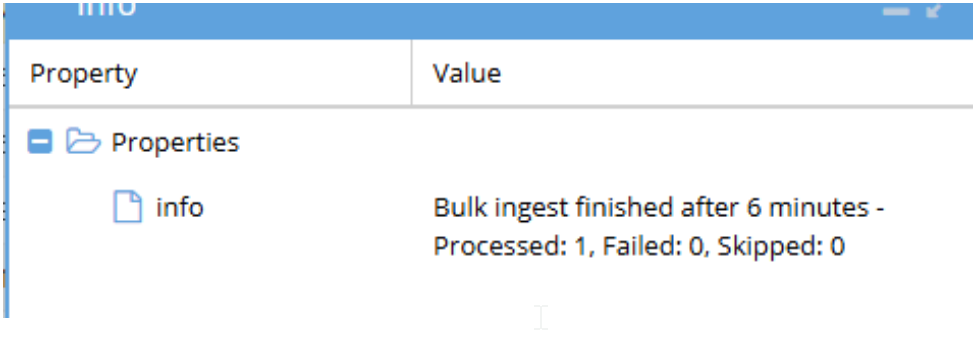

Example stats:

{
  "cluster_name": "QA-DS",
  "cluster_guid": "2f5fd126-1e30-5a9f-9fe7-329e73a18ec5",
  "daySpan": "1",
  "startDate": "2025-05-15T00:00:00",
  "status": "FINISHED",
  "message": "Processed: 1, Failed: 0, Skipped: 0"
}
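To work with these counts programmatically, for example after copying the local stats file at /opt/superna/sca/data/ecasettings/bulkingest.json, the message string can be split into integers. The sketch below embeds the example stats document as valid JSON and assumes the message always uses comma-separated "Name: number" pairs as in the example; summarize_stats is a hypothetical helper, not a Superna API.

```python
import json
import re

# The example stats document, embedded as a string instead of read from disk.
EXAMPLE_STATS = """{
  "cluster_name": "QA-DS",
  "cluster_guid": "2f5fd126-1e30-5a9f-9fe7-329e73a18ec5",
  "daySpan": "1",
  "startDate": "2025-05-15T00:00:00",
  "status": "FINISHED",
  "message": "Processed: 1, Failed: 0, Skipped: 0"
}"""

# Assumption: the message is a list of "Name: <integer>" pairs separated by commas.
COUNT_PATTERN = re.compile(r"(\w+):\s*(\d+)")


def summarize_stats(raw: str) -> dict:
    """Return cluster name, job status, and processed/failed/skipped counts as ints."""
    stats = json.loads(raw)
    counts = {
        name.lower(): int(value)
        for name, value in COUNT_PATTERN.findall(stats["message"])
    }
    return {"cluster": stats["cluster_name"], "status": stats["status"], **counts}


if __name__ == "__main__":
    print(summarize_stats(EXAMPLE_STATS))
    # {'cluster': 'QA-DS', 'status': 'FINISHED', 'processed': 1, 'failed': 0, 'skipped': 0}
```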

Turboaudit Logs

During the "Wait for Bulk Ingest Completion" step, Turboaudit handles the ingestion.

To check ingestion progress:

- View logs on each Turboaudit node

- Each node may ingest different files in parallel

- Logs include the current file being processed and ingestion status per file

These logs are critical for understanding slowdowns, file-specific issues, or failures during ingestion.

Statistics Tracked

- Number of successfully processed files

- Number of files skipped due to prior processing

- Number of files that failed during processing

How to View Progress

Start the Queue Monitor Process

- Log in to Node 1: Log in to Node 1 of the ECA cluster.

- Start Kafkahq Container: Run the command:

  ecactl containers up -d kafkahq

View Ingestion Jobs

-

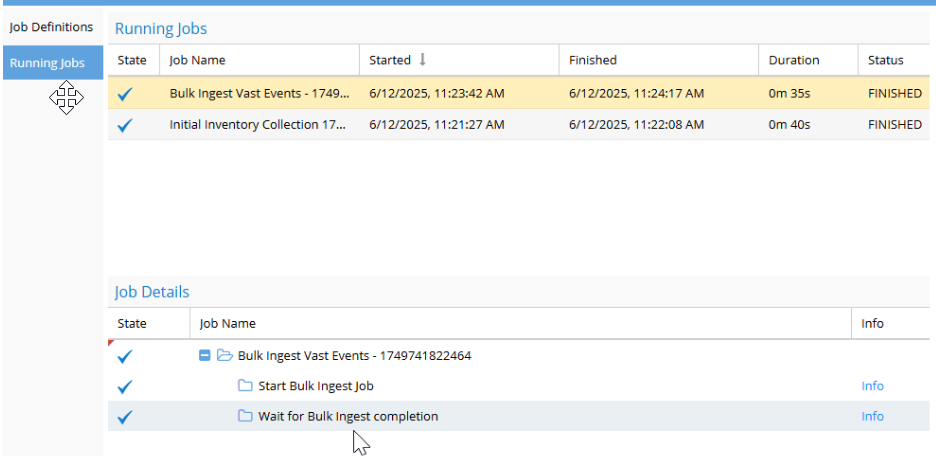

Open Jobs Tab

Open the Eyeglass Jobs Icon → Running Jobs tab

-

Check Completion Status

Wait for the blue checkmark to indicate job completion

-

Monitor Progress

A spinning symbol means the job is still in progress

-

Verify Job Completion

The screen must show "finished" before submitting another job

Note: When a bulk ingest job shows as completed in the UI, it means all events have been ingested into the bulk ingestion topic, from which the database consumer group "eventarchive" reads them and writes them to the database. A completed job in the UI therefore does not mean that all events are in the database; confirm this in Kafkahq, as described in View Event Ingestion Progress.

View Event Ingestion Progress

- Open Browser: Open a web browser.

- Access Kafkahq Interface: Navigate to http://x.x.x.x/kafkahq (replace x.x.x.x with the IP address of ECA Cluster Node 1).

- Enter Login Credentials:

  - Username: ecaadmin

  - Password: 3y3gl4ss

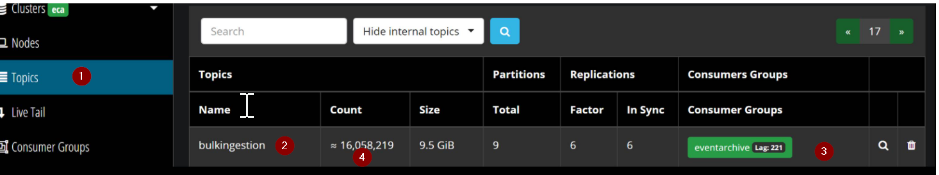

Navigate to Topic

- Open Topics Section: Go to the Topics section.

- Select Bulkingestion: Select the bulkingestion topic.

Key Metrics

Lag

- This value fluctuates based on ingestion speed

- A value of 0 indicates that the ingestion job has completed

- To confirm job completion, check the Running Jobs icon in Eyeglass

Count

- This value should always increase as new events are processed

- It reflects the total number of ingested events

- This count will increase with every ingestion job run

Ingestion into the database is not complete until the eventarchive lag clears. For more information on database ingestion, view the ECA monitor for evtarchive sent and received events, and check the evtarchive logs.
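In standard Kafka terms, the Lag that Kafkahq shows for a consumer group is the sum, over the topic's partitions, of the log end offset minus the group's committed offset. The sketch below computes that from hypothetical offset numbers and applies the completion rule described in this guide: database ingestion is done only when the Eyeglass job is finished and the eventarchive lag is 0. The function names and partition data are illustrative assumptions; in practice Kafkahq computes the lag for you.

```python
from typing import Dict


def consumer_group_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> int:
    """Sum of (log end offset - committed offset) across all partitions of a topic.

    Assumption: a partition with no committed offset counts as unread from offset 0.
    """
    total = 0
    for partition, end_offset in end_offsets.items():
        # Clamp at 0 so a stale end-offset snapshot never yields negative lag.
        total += max(end_offset - committed.get(partition, 0), 0)
    return total


def db_ingestion_complete(ui_job_finished: bool, eventarchive_lag: int) -> bool:
    # "Finished" in the Eyeglass UI only means events reached the topic;
    # the eventarchive consumer group must also have caught up (lag 0).
    return ui_job_finished and eventarchive_lag == 0


if __name__ == "__main__":
    # Hypothetical offsets for a two-partition topic.
    lag = consumer_group_lag({0: 1200, 1: 950}, {0: 1200, 1: 900})
    print(lag, db_ingestion_complete(True, lag))  # 50 False
```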

Advanced Operations

View Data in ZooKeeper

- Access Node 1: SSH to Node 1.

- Start ZooKeeper Shell: Run:

  ecactl zk shell

- View History Data: Inside the shell, run:

  get /ecasettings/bulkingest/history

Delete Previous Bulkingest History

- Access Node 1: SSH to Node 1.

- Start ZooKeeper Shell: Run the command:

  ecactl zk shell

- Delete History Data: Run the command:

  delete /ecasettings/bulkingest/history

Manually Stop Bulkingestion Job (Not Recommended)

This should only be done if absolutely necessary. Please also note that restarting SCA or TurboAudit (TA) during a bulk ingest operation does not cancel the job. TurboAudit will automatically resume processing when it comes back online.

- Stop TurboAudit Services: Stop TA on all nodes.

- Access ZooKeeper: SSH to ECA node 1 and run the command:

  ecactl zk shell

- Retrieve Current Settings: Retrieve the bulk ingest settings using the command:

  get /ecasettings/bulkingest.json

- Modify Status Value: Replace the status value with "FINISHED", keep every other field unchanged, and write the result as single-line JSON.

- Update ZooKeeper Settings: Run the command:

  set /ecasettings/bulkingest.json <single-line-json>

- Verify Changes: Run get /ecasettings/bulkingest.json again and confirm that the status is "FINISHED".

- Restart Services: Restart TA on all nodes.
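The Modify Status Value step can be done with any JSON tool. The sketch below uses only Python's standard json module to change the status field and re-serialize the settings on one line; the function name mark_finished and the RUNNING status in the example are hypothetical. Compact separators also drop the spaces after commas and colons, which may avoid quoting problems when pasting the value into the ZooKeeper shell (an assumption; check how your shell handles the input).

```python
import json


def mark_finished(current_json: str) -> str:
    """Return the settings as single-line JSON with only "status" set to FINISHED."""
    settings = json.loads(current_json)
    if "status" not in settings:
        raise KeyError('settings have no "status" field')
    settings["status"] = "FINISHED"
    # Without indent, json.dumps emits no newlines; separators removes extra spaces.
    # Key order is preserved, so every other field stays as it was.
    return json.dumps(settings, separators=(",", ":"))


if __name__ == "__main__":
    # Hypothetical in-progress settings; paste your real output of the get command instead.
    current = '{"cluster_name": "QA-DS", "daySpan": "1", "status": "RUNNING"}'
    print(mark_finished(current))
    # {"cluster_name":"QA-DS","daySpan":"1","status":"FINISHED"}
```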